How to Build a Production-Ready RAG System with Node.js and OpenAI: A Comprehensive Guide for SaaS Founders

Discover how to build a scalable RAG system using Node.js and OpenAI. Learn the architecture, vector databases, and implementation steps for AI startups and SaaS founders.

The honeymoon phase with basic Large Language Models (LLMs) is officially over. For AI startups and SaaS founders, simply slapping a ChatGPT wrapper onto a product no longer provides a competitive edge. Your users demand more: they want AI that understands their specific business context, their private documentation, and their historical data—all without hallucinating or leaking sensitive information.

This is where Retrieval-Augmented Generation (RAG) comes into play. RAG is the bridge between a static model and dynamic, proprietary data. By grounding your AI responses in specific, retrieved facts, you solve the twin problems of outdated training data and imaginative inaccuracies.

But how do you move from a Python notebook prototype to a scalable, production-grade system? For many enterprise environments, Node.js is the answer. Its non-blocking I/O, massive package ecosystem, and ubiquity in the modern SaaS stack make it a powerhouse for building fast, reliable AI middleware.

In this guide, we will break down the RAG architecture from the perspective of a founder building for scale. We'll explore the tech stack, the implementation steps, and the architectural trade-offs you must navigate to turn your data into a strategic asset.

TL;DR: Building RAG for Startups

- RAG Strategy: Grounding OpenAI models in your private data to reduce hallucinations.

- Tech Stack: Leveraging Node.js, OpenAI Embeddings, and high-performance Vector Databases (like Pinecone or Weaviate).

- Efficiency: Why Node.js is superior for the orchestration layer in high-traffic SaaS applications.

- The Workflow: Ingesting data, generating vector embeddings, and performing semantic search.

- SaaS Growth: Using RAG to reduce support costs and increase product stickiness through deep context awareness.

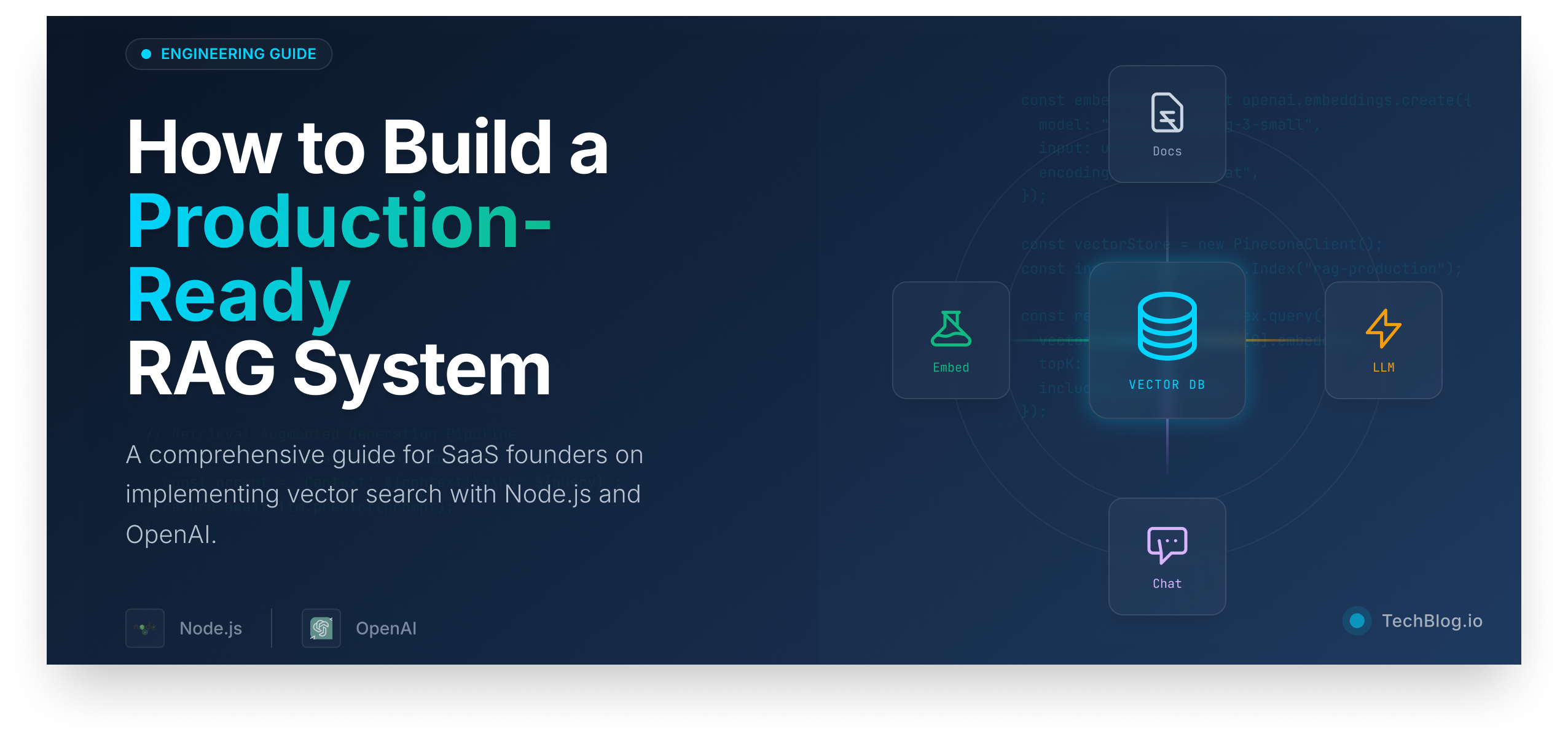

Understanding the RAG Architecture for Modern SaaS

At its core, RAG is about adding a "memory" to your LLM. Standard models like GPT-4 are trained on a snapshot of the public internet. They don't know about your user's CRM data, your latest API documentation, or your internal Slack conversations. RAG architecture solves this by retrieving relevant document snippets before sending a prompt to the AI.
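The "retrieve, then prompt" idea can be sketched as a small prompt builder. This is a minimal illustration, not a definitive implementation: the `RetrievedSnippet` shape, the `buildGroundedPrompt` name, and the instruction wording are all assumptions made for this example.

```typescript
// Hypothetical shape for a retrieved snippet; the field names are illustrative.
interface RetrievedSnippet {
  source: string; // e.g. a document title or URL
  text: string;
}

// Places the retrieved snippets ahead of the user's question so the model
// is asked to answer from the supplied context rather than from memory.
function buildGroundedPrompt(
  question: string,
  snippets: RetrievedSnippet[],
): string {
  // Number each snippet so an answer can refer back to its source.
  const context = snippets
    .map((s, i) => `[${i + 1}] (${s.source}) ${s.text}`)
    .join("\n");

  return [
    "Answer the question using only the context below.",
    "If the context does not contain the answer, say you don't know.",
    "",
    "Context:",
    context,
    "",
    `Question: ${question}`,
  ].join("\n");
}
```

Numbering the snippets is one simple way to let the final answer point back at its sources.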

The Core Components

To build a robust system, you need four primary layers:

- Data Ingestion Layer: Scrapers, PDF parsers, and API connectors that pull your source data.

- Vectorization (Embeddings): Converting text into numerical vectors using OpenAI’s text-embedding-3-small or text-embedding-3-large models.

- Vector Database: A specialized database designed to store and search these high-dimensional vectors (e.g., Pinecone, Supabase pgvector, or Milvus).

- The Orchestrator (Node.js): The logic that handles user queries, coordinates retrieval, and constructs the final prompt for the LLM.
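One way to picture how these layers fit together is an orchestrator that depends only on small interfaces. The sketch below is an assumption-laden illustration: `Embedder`, `VectorStore`, `ChatModel`, and `answerQuestion` are hypothetical names, not part of any SDK, and real implementations would wrap the OpenAI client and your vector database of choice.

```typescript
// Hypothetical interfaces standing in for the embedding model,
// the vector database, and the LLM. None of these come from a real SDK.
interface Embedder {
  embed(text: string): Promise<number[]>;
}
interface VectorStore {
  search(vector: number[], topK: number): Promise<string[]>;
}
interface ChatModel {
  complete(prompt: string): Promise<string>;
}
interface RagDeps {
  embedder: Embedder;
  store: VectorStore;
  llm: ChatModel;
}

// The orchestrator: embed the query, retrieve snippets, build the prompt,
// then ask the model. Each step depends on the previous one.
async function answerQuestion(
  question: string,
  deps: RagDeps,
  topK = 3,
): Promise<string> {
  const queryVector = await deps.embedder.embed(question);
  const snippets = await deps.store.search(queryVector, topK);
  const prompt = `Context:\n${snippets.join("\n---\n")}\n\nQuestion: ${question}`;
  return deps.llm.complete(prompt);
}
```

Keeping the dependencies behind interfaces means the orchestration logic can be unit-tested with in-memory fakes and the vector database can be swapped without touching it.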

Why Node.js is the Secret Weapon for RAG

While the data science community loves Python, the SaaS community thrives on Node.js. Building your RAG system in Node.js offers several distinct advantages for startups:

Non-Blocking Scalability

RAG involves multiple asynchronous calls: one to the embedding model, one to the vector database, and one to the LLM. Because Node's event loop does not block while waiting on these I/O operations, a single process can keep serving other requests in the meantime, which handles concurrent load more efficiently than standard synchronous frameworks and reduces latency for your end users.
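A small sketch of what non-blocking I/O buys you: independent lookups can be in flight at the same time. Within a single RAG request the main steps depend on each other, so the benefit shows up mostly across requests or for independent lookups like the two below. Here `fakeIoCall` and `fetchContextConcurrently` are hypothetical helpers, and a timer stands in for a real network call.

```typescript
// Counters used only to observe how many calls overlap.
let inFlight = 0;
let peakInFlight = 0;

// Simulates an I/O-bound call (e.g. an HTTP request) with a timer.
async function fakeIoCall<T>(value: T, delayMs: number): Promise<T> {
  inFlight += 1;
  peakInFlight = Math.max(peakInFlight, inFlight);
  await new Promise<void>((resolve) => setTimeout(resolve, delayMs));
  inFlight -= 1;
  return value;
}

// Starts both lookups before awaiting either, so they overlap
// instead of running one after the other.
async function fetchContextConcurrently(): Promise<{
  docs: string;
  profile: string;
  peakInFlight: number;
}> {
  const [docs, profile] = await Promise.all([
    fakeIoCall("doc snippets", 20),
    fakeIoCall("user profile", 20),
  ]);
  return { docs, profile, peakInFlight };
}
```

With `Promise.all`, both calls are pending at once, so the total wait is roughly that of the slowest call rather than the sum of both.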

Full-Stack Consistency

If your front-end is React or Vue, keeping your AI logic in TypeScript/Node.js allows for shared types, easier code reviews, and a more streamlined CI/CD pipeline. SaaS founders can leverage their existing engineering talent without needing to hire a separate specialized AI team.

How to Build a RAG System: A Step-by-Step Implementation

Building a RAG system involves more than just a single API call. Here is the tactical roadmap for implementation using Node.js and OpenAI.

Step 1: Data Preparation and Chunking

You cannot pass a 500-page PDF to OpenAI in a single prompt. You must break the data into smaller, manageable pieces called chunks. Aim for 500-1000 tokens per chunk. Ensure you include an "overlap" (e.g., 10-15%) between chunks so that context isn't lost at the break points.
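Chunking with overlap can be sketched as a sliding window. The example below is a simplification under a stated assumption: it counts whitespace-separated words as a stand-in for tokens, whereas a real tokenizer would give different counts. The `chunkText` name and its defaults are illustrative.

```typescript
// Splits text into overlapping chunks using a sliding window.
// ASSUMPTION: whitespace-separated words approximate tokens here.
function chunkText(
  text: string,
  chunkSize = 800,
  overlapRatio = 0.1,
): string[] {
  if (chunkSize <= 0) throw new Error("chunkSize must be positive");
  if (overlapRatio < 0 || overlapRatio >= 1) {
    throw new Error("overlapRatio must be in [0, 1)");
  }

  const words = text.split(/\s+/).filter((w) => w.length > 0);
  const overlap = Math.floor(chunkSize * overlapRatio);
  const step = chunkSize - overlap; // how far the window advances each time

  const chunks: string[] = [];
  for (let start = 0; start < words.length; start += step) {
    chunks.push(words.slice(start, start + chunkSize).join(" "));
    // Stop once the current window has reached the end of the text.
    if (start + chunkSize >= words.length) break;
  }
  return chunks;
}
```

Each chunk repeats the tail of the previous one, so a sentence that straddles a boundary still appears whole in at least one chunk when the overlap is large enough.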

Step 2: Generating Embeddings

Once your text is chunked, you send it to the OpenAI Embeddings API. This converts each chunk into a vector of numbers (1536 dimensions by default for text-embedding-3-small, 3072 for text-embedding-3-large). These numbers represent the semantic meaning of the text.
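To make "semantic meaning as numbers" concrete, the sketch below ranks stored chunks by cosine similarity to a query vector. It is an in-memory illustration only: a vector database would perform this search at scale, and the `EmbeddedChunk` shape and function names are assumptions made for the example.

```typescript
// Cosine similarity: near 1 means the vectors point the same way,
// 0 means they are orthogonal (unrelated).
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("vectors must have the same length");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Hypothetical record pairing a chunk of text with its embedding.
interface EmbeddedChunk {
  text: string;
  vector: number[];
}

// Returns the k chunks whose vectors are most similar to the query vector.
function topKSimilar(
  query: number[],
  chunks: EmbeddedChunk[],
  k: number,
): EmbeddedChunk[] {
  return chunks
    .map((chunk) => ({ chunk, score: cosineSimilarity(query, chunk.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((entry) => entry.chunk);
}
```

Real embeddings have hundreds or thousands of dimensions, but the ranking logic is the same as with the toy vectors you might use to try this out.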

RAG vs. Fine-Tuning: Which Should You Choose?

In 90% of SaaS use cases, RAG is superior to fine-tuning.

| Feature | Fine-Tuning | RAG (Retrieval-Augmented) |
|---|---|---|
| Data Recency | Static (requires retraining) | Real-time (just update the DB) |
| Hallucination Risk | Higher (model relies on memory) | Lower (grounded in context) |
| Cost | High (compute + data prep) | Low (DB storage + API calls) |
| Transparency | Black box | Can provide source citations |

Frequently Asked Questions

How much does it cost to run a RAG system?

Costs scale with usage. Major expenses include OpenAI embedding tokens (very cheap), Vector Database storage (starts at $0-$70/month for managed versions), and GPT-4 prompt tokens. For most startups, RAG is significantly cheaper than human support teams or custom model training.
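The cost components above add up in a straightforward way, which the sketch below makes explicit. It deliberately contains no real prices: every rate is supplied by the caller, and the type and function names are hypothetical. Check the current pricing pages before plugging in numbers.

```typescript
// Monthly usage figures; all values are supplied by the caller.
interface UsageEstimate {
  embeddingTokens: number; // tokens sent to the embeddings API per month
  promptTokens: number; // prompt tokens sent to the chat model per month
  vectorDbMonthlyUsd: number; // flat monthly fee for the vector database
}

// Prices in USD per one million tokens. These are inputs, not real quotes.
interface PricePerMillionTokensUsd {
  embedding: number;
  prompt: number;
}

// Sums the three cost components named in the text.
function estimateMonthlyCostUsd(
  usage: UsageEstimate,
  prices: PricePerMillionTokensUsd,
): number {
  const embeddingCost = (usage.embeddingTokens / 1e6) * prices.embedding;
  const promptCost = (usage.promptTokens / 1e6) * prices.prompt;
  return embeddingCost + promptCost + usage.vectorDbMonthlyUsd;
}
```

This ignores completion tokens and any re-embedding when documents change, so treat the result as a lower bound for planning purposes.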

Conclusion: Future-Proofing Your AI Startup

Building a RAG system using Node.js and OpenAI provides the scalability, accuracy, and security that enterprise clients demand. As the AI landscape continues to evolve, the founders who succeed will be those who focus on context.

Scale Your AI Implementation Today

Building a production-ready RAG system involves complex decisions regarding data privacy and latency optimization. Don't waste months on trial and error.